[Page created in 2024 for translation of original content]

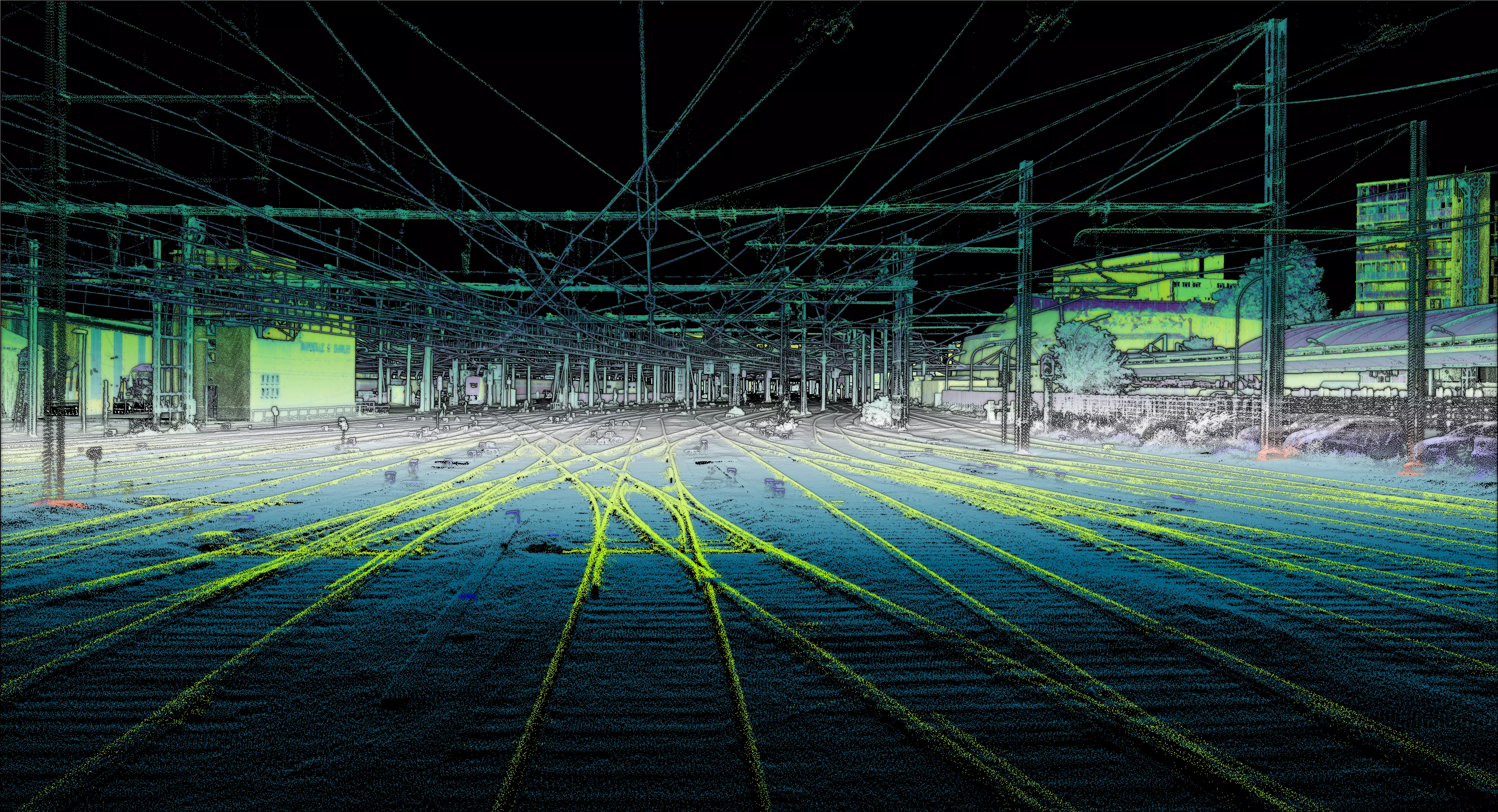

To keep traffic flowing, many maintenance operations that require direct intervention on railway networks take place at night. However, working at night limits visibility and shortens the time available for intervention.

To make these operations more efficient, it seemed worthwhile to develop a system that helps maintenance personnel keep equipment safe while construction machinery is moving.

Indeed, as when driving a car, operators only have a partial view of their surroundings because of blind spots.

During my internship, I contributed to a project that integrates artificial intelligence directly into embedded systems, with the support of my supervisor Quentin LEMESLE and Altametris' Data Science Program Manager, Anis CHAARANA.

One of the systems I worked on was designed to be installed in the driver's cabin. It detects obstacles on the tracks by combining a camera, an embedded computer, and an artificial intelligence (AI) algorithm. In essence, it is a complex system that captures images and issues warnings.
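The camera, computer and AI chain described above can be pictured as a simple capture, detect and alert loop. The sketch below only illustrates that architecture. The `Detection` type, the `detect` and `alert` callables, the class names in `DANGEROUS_LABELS` and the 0.5 confidence threshold are all hypothetical placeholders, not the project's actual code or model.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Detection:
    label: str         # object class predicted by the detector (hypothetical names)
    confidence: float  # detector score in [0, 1]


# Hypothetical set of classes treated as dangerous obstacles on the track.
DANGEROUS_LABELS = {"person", "vehicle", "obstacle"}


def process_stream(
    frames: Iterable[object],
    detect: Callable[[object], List[Detection]],
    alert: Callable[[List[Detection]], None],
    min_confidence: float = 0.5,
) -> int:
    """Run the detector on each camera frame and call `alert` whenever a
    dangerous object is found. Returns the number of frames that raised an alert."""
    alerts = 0
    for frame in frames:
        # Keep only confident detections of dangerous classes.
        hits = [
            d for d in detect(frame)
            if d.label in DANGEROUS_LABELS and d.confidence >= min_confidence
        ]
        if hits:
            alert(hits)  # e.g. sound a buzzer and highlight the object on the screen
            alerts += 1
    return alerts
```

In a real system, `frames` would come from the camera driver and `detect` would wrap the AI model running on the onboard computer. Passing them in as parameters keeps the loop testable with fake data.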

1. Prototyping the System: A Detailed Specification to Effectively Meet Railway Standards

As with any prototyping project, the first step in developing the embedded system was drawing up the specifications. This phase took place during the first six weeks of my internship.

A. A System with Components Sized for Its Use

The system therefore had to integrate:

- A powerful yet energy-efficient onboard computer

- A camera with good vision both during the day and at night

- A small information screen

In addition to the components, the system had to be lightweight and slim to fit in most places, including the windshields of construction machinery.

B. A dedicated onboard computer for the project

Combined with the rest of the equipment, the tool's strength lies in the onboard computer's ability to perform calculations in real time.

We therefore compared several solutions from one of the largest manufacturers of image-processing chips, as they offer powerful yet energy-efficient products. We tested two versions with different levels of computing power and ultimately chose the computer capable of processing 6 images per second.
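A throughput of 6 images per second leaves about 167 ms to process each frame. The sketch below shows one way such a figure could be checked on a candidate computer. `measure_fps` times any per-frame callable. The `run_inference` function mentioned afterwards is a hypothetical stand-in for the actual model call.

```python
import time
from typing import Callable


def frame_budget_ms(target_fps: float) -> float:
    """Time available to process one frame at the target frame rate, in ms."""
    return 1000.0 / target_fps


def measure_fps(process_frame: Callable[[], None], n_frames: int = 50) -> float:
    """Average frames per second achieved by calling `process_frame` n_frames times."""
    if n_frames <= 0:
        raise ValueError("n_frames must be positive")
    start = time.perf_counter()
    for _ in range(n_frames):
        process_frame()
    elapsed = time.perf_counter() - start
    return n_frames / elapsed
```

For example, `measure_fps(lambda: run_inference(frame))` would report the sustained rate of a hypothetical `run_inference` on one frame. Comparing it with `frame_budget_ms(6)` (about 166.7 ms) shows how much headroom each candidate computer leaves.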

We also tested the tool's ability to operate in an enclosed environment at an outside temperature of 30°C, simulating real summer operating conditions. During this test, we monitored the temperature of two components: the central processing unit (CPU) and the graphics processing unit (GPU).
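The article does not say how the temperatures were collected. Assuming the onboard computer runs Linux, one common source is the kernel's thermal sysfs interface. Under `/sys/class/thermal`, each `thermal_zoneN` directory has a `type` file (the zone name) and a `temp` file (the temperature in millidegrees Celsius). The sketch below reads every zone. Zone names such as CPU or GPU sensors depend on the board, so treat them as unknown here.

```python
from pathlib import Path
from typing import Dict


def read_thermal_zones(root: str = "/sys/class/thermal") -> Dict[str, float]:
    """Return {zone name: temperature in °C} for each Linux thermal zone.

    Assumes the standard sysfs layout: <root>/thermal_zoneN/type holds the
    zone name and <root>/thermal_zoneN/temp holds millidegrees Celsius.
    Zones sharing a name overwrite each other in the result.
    """
    temps: Dict[str, float] = {}
    for zone in sorted(Path(root).glob("thermal_zone*")):
        try:
            name = (zone / "type").read_text().strip()
            millideg = int((zone / "temp").read_text().strip())
        except (OSError, ValueError):
            continue  # skip zones that are missing or report a non-numeric value
        temps[name] = millideg / 1000.0
    return temps
```

Calling this function at a fixed interval during the 30°C test and saving each result with a timestamp would produce temperature curves for each component.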

The recorded temperatures are high but remain within acceptable limits: they never reach the maximum temperature threshold, and the system's performance is not affected.

The test was stopped after less than 3 hours because the values had reached a plateau, with no variation during the last hour. The temperature inside the casing was also satisfactory and likewise reached a plateau during the test. As the graph below shows, it follows the temperature of the components.
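Stopping the test once the readings have plateaued can be expressed as a simple rule: the readings over a recent window must vary by no more than a small tolerance. The sketch below assumes one reading per sample interval. The window and tolerance values are hypothetical choices, not figures from the test.

```python
from typing import Sequence


def has_plateaued(samples: Sequence[float], window: int, tolerance: float) -> bool:
    """True if the last `window` readings vary by at most `tolerance`.

    With one temperature reading per minute, window=60 checks the last hour.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    if len(samples) < window:
        return False  # not enough data yet to judge
    recent = samples[-window:]
    return max(recent) - min(recent) <= tolerance
```

For instance, with readings taken every minute, `has_plateaued(cpu_temps, window=60, tolerance=1.0)` would check that the CPU temperature moved by no more than 1°C over the last hour.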

Finally, in the context of an energy crisis, it was essential to take the system's power consumption into account and measure it. We measured 28.65 W, which remains reasonable compared to a laptop computer, whose consumption can range from 60 to 240 W.

We will therefore size the battery accordingly, to strike the best compromise between autonomy and portability in the final system.
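Sizing the battery starts from the measured 28.65 W: the energy needed is power multiplied by running time, divided by any losses. The sketch below makes that arithmetic explicit. The 8-hour run time, the 90% conversion efficiency and the 80% usable fraction of the battery are hypothetical assumptions for illustration only.

```python
def battery_capacity_wh(power_w: float, hours: float,
                        efficiency: float = 0.9,
                        usable_fraction: float = 0.8) -> float:
    """Nominal battery capacity (Wh) needed to power a load for `hours`.

    efficiency: assumed conversion efficiency between battery and system.
    usable_fraction: assumed share of the nominal capacity that can be drawn.
    """
    if not (0 < efficiency <= 1 and 0 < usable_fraction <= 1):
        raise ValueError("efficiency and usable_fraction must be in (0, 1]")
    return power_w * hours / (efficiency * usable_fraction)
```

Under these assumptions, an 8-hour night shift needs 28.65 × 8 = 229.2 Wh delivered to the system, or about 318 Wh of nominal capacity (`battery_capacity_wh(28.65, 8)`). Each extra hour of autonomy adds weight, which is the compromise described above.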

C. A night vision camera

To address the initial problem of poor visibility at night, we had to pay particular attention to the choice of visual sensors. We needed a high-resolution camera capable of delivering a clear image, especially in motion and in very low light.

Indeed, as with any camera sensor, the larger the pixel, the more light it collects.

To be effective at night, it was therefore necessary to increase the size of the pixels.

We took the opposite approach to that of the latest Samsung smartphone, the Galaxy S23 Ultra, and its 200-megapixel sensor.

Its pixels measure only 0.64 µm, a seemingly tiny size, but its image processing actually combines neighboring pixels (a technique known as pixel binning). This reduces the resolution to obtain an image with effectively larger pixels, like this:

![]()
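Pixel binning, as described above, adds the signal of each small block of neighboring pixels into a single output pixel. The NumPy sketch below shows the principle on a single-channel image. It is a generic illustration, not the processing actually used in the Samsung phone or in our camera.

```python
import numpy as np


def bin_pixels(image: np.ndarray, factor: int) -> np.ndarray:
    """Sum each factor x factor block of a 2-D (single-channel) image.

    The output has factor**2 fewer pixels, and each output pixel gathers the
    light of factor**2 input pixels, as one larger pixel would.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    h, w = image.shape
    h, w = h - h % factor, w - w % factor  # crop so both dimensions divide evenly
    blocks = image[:h, :w].reshape(h // factor, factor, w // factor, factor)
    return blocks.sum(axis=(1, 3))
```

As an arithmetic example, 4×4 binning of a 200-megapixel image with 0.64 µm pixels would give 12.5 megapixels with an effective pixel size of 4 × 0.64 = 2.56 µm.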

Given our needs, we opted for a camera with an even larger sensor but a lower resolution. With very large pixels, it captures as much light as possible.
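The trade-off above comes from simple geometry. Pixel pitch is roughly the sensor width divided by the number of pixels across it. The light each pixel gathers scales with its area, so it grows with the square of the pitch. The sketch below ignores gaps between pixels and differences in optics. The 5.76 mm / 1600-pixel sensor in the example is hypothetical, not the camera we selected.

```python
def pixel_pitch_um(sensor_width_mm: float, horizontal_pixels: int) -> float:
    """Approximate pixel pitch in micrometres (ignores gaps between pixels)."""
    return sensor_width_mm * 1000.0 / horizontal_pixels


def relative_light(pitch_a_um: float, pitch_b_um: float) -> float:
    """How many times more light a pixel of pitch a collects than one of
    pitch b, assuming collected light scales with pixel area (same exposure
    and optics)."""
    return (pitch_a_um / pitch_b_um) ** 2
```

For the hypothetical sensor, `pixel_pitch_um(5.76, 1600)` gives 3.6 µm. `relative_light(3.6, 0.64)` is about 31.6, so each of its pixels would collect roughly 31 times more light than a 0.64 µm pixel.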

Finally, to ensure a clear image in motion, we selected a sensor technology different from the most common models.

Ultimately, the camera will have a global-shutter sensor, capable of capturing information from all pixels at once. This method differs from that of traditional rolling-shutter sensors, which capture the image line by line.

![]()

As illustrated above, on the left, a traditional sensor records rows of pixels one after another. Each row is therefore captured slightly later than the previous one, which deforms the image when objects are in motion.

On the right is the sensor chosen for the system. The difference is striking and shows why we chose this technology.

End users will therefore obtain distortion-free images, which also give better results when processed by the artificial intelligence (AI).
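The distortion difference between the two sensor types can be reproduced with a small simulation. A vertical bar moves horizontally across the frame. With a global shutter, every row is exposed at the same instant, so the bar stays vertical. With a rolling shutter, row `r` is read out at `r × line_time`, so the bar has moved further by the time lower rows are captured and appears slanted. The speed and line time in the example are hypothetical values.

```python
from typing import List


def bar_column_per_row(rows: int, x0: float, speed_px_per_s: float,
                       line_time_s: float, rolling: bool) -> List[float]:
    """Column at which a horizontally moving vertical bar appears in each row.

    Global shutter (rolling=False): all rows exposed at t = 0, bar stays vertical.
    Rolling shutter (rolling=True): row r read out at t = r * line_time_s,
    so the bar is shifted a little more in each successive row.
    """
    positions = []
    for r in range(rows):
        t = r * line_time_s if rolling else 0.0
        positions.append(x0 + speed_px_per_s * t)
    return positions
```

With 1080 rows, a hypothetical line time of 20 µs and an object crossing the image at 1000 pixels per second, the bottom row is shifted by 1079 × 20e-6 × 1000 ≈ 21.6 pixels relative to the top row. That shift is the skew visible on the left of the illustration.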

2. A Viable Automatic Detection Prototype, Tested in Real Conditions

Once the prototype was developed, we began a testing phase to adapt it to the evolving needs and constraints expressed by our clients.

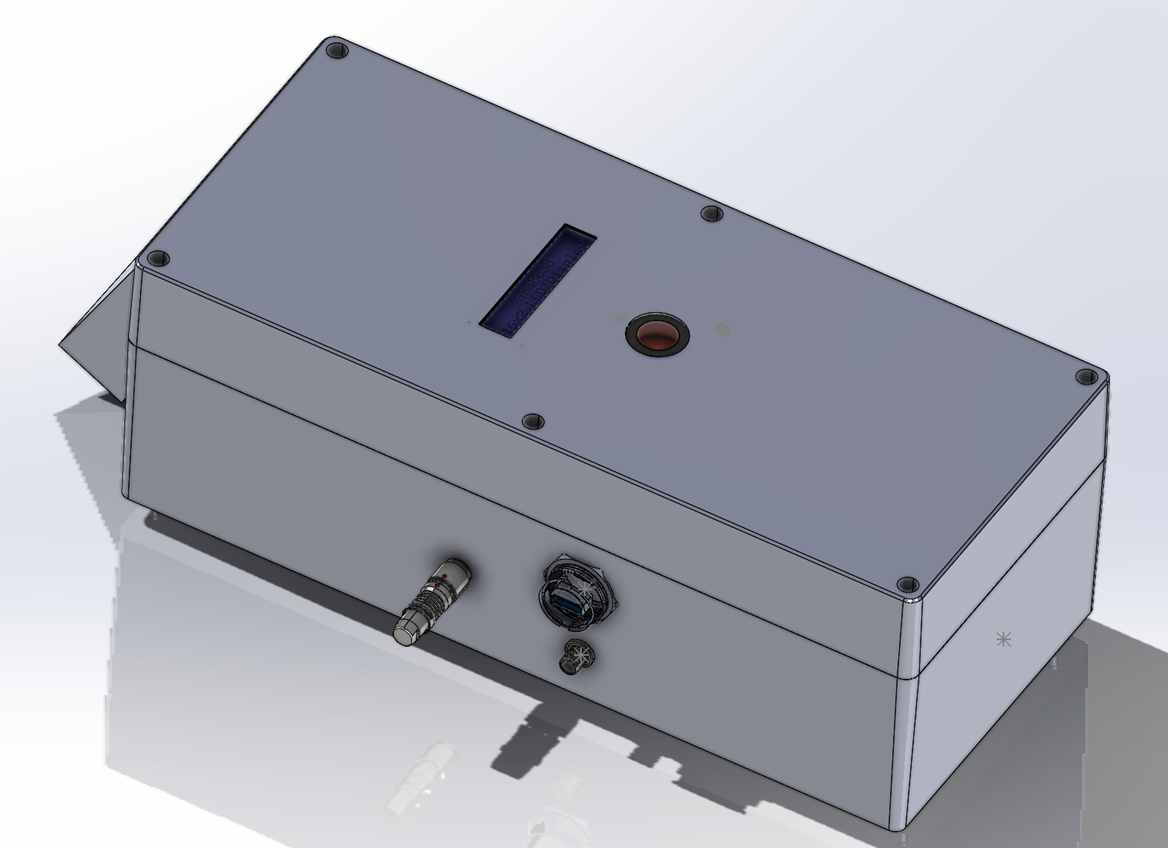

The initial prototypes were quite large and heavy (illustration below).

Non-contractual visual of the prototype of the onboard system

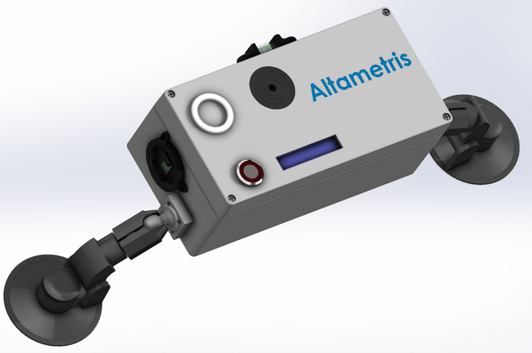

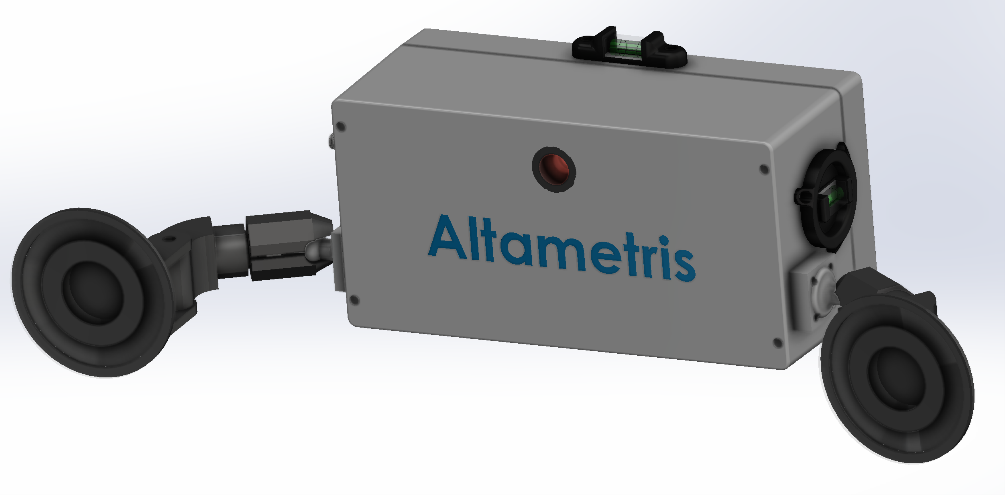

They were designed to be flexible and modular so that components could be integrated for various uses, for example to assist with train shunting. Ultimately, because weight and bulk on the windshields of construction machinery had to be limited, we reduced the prototype's size and further developed its modularity and its mounting system, as illustrated below:

Non-contractual visual of the prototype of the embedded system

Non-contractual visual of the prototype of the embedded system

At the end of my five-month internship, the initial hypothesis was confirmed: our embedded system, which combines high-resolution sensors and artificial intelligence, helps make nighttime maintenance operations safer. It does so by emitting an audible and visual signal when a dangerous obstacle is detected.
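The article does not describe how the audible and visual signal is triggered. One common design, shown here only as a hypothetical sketch, is a k-of-n rule: the alert fires only if a danger was detected in at least `k` of the last `n` frames. This filters out single spurious detections at the cost of a short delay. The values `k=3` and `n=5` are illustrative assumptions.

```python
from collections import deque


class AlertDebouncer:
    """Hypothetical k-of-n alert filter: the alert is on when a dangerous
    obstacle was detected in at least `k` of the last `n` frames."""

    def __init__(self, k: int = 3, n: int = 5):
        if not 0 < k <= n:
            raise ValueError("need 0 < k <= n")
        self.k = k
        self.history = deque(maxlen=n)  # oldest frames drop out automatically

    def update(self, danger_in_frame: bool) -> bool:
        """Record the latest frame's result; return True if the alert should sound."""
        self.history.append(danger_in_frame)
        return sum(self.history) >= self.k
```

At 6 images per second, `k=3` means the earliest an alert can sound is after three processed frames, about half a second. For a safety function, this trade-off between false alarms and reaction time would need to be validated in the field.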

At present, the system is still in the development phase, and several iterations of the prototype will likely be needed before it is fully operational. However, its fundamentals have largely withstood experimental testing, and in its current state the system already meets the client's needs.

By summer, I expect new functional improvements to be visible.

I would like to thank the entire Altametris team for welcoming me, sharing their knowledge, giving me opportunities for exchanges outside of work, and showing me how a company works. I am particularly grateful to everyone who helped me during this internship and with this project. In particular, I would like to thank Quentin Lemesle for allowing me to work on this topic, guiding me, and helping me learn more while sharing his passion.

Altametris values not only data but also the skills of each individual.